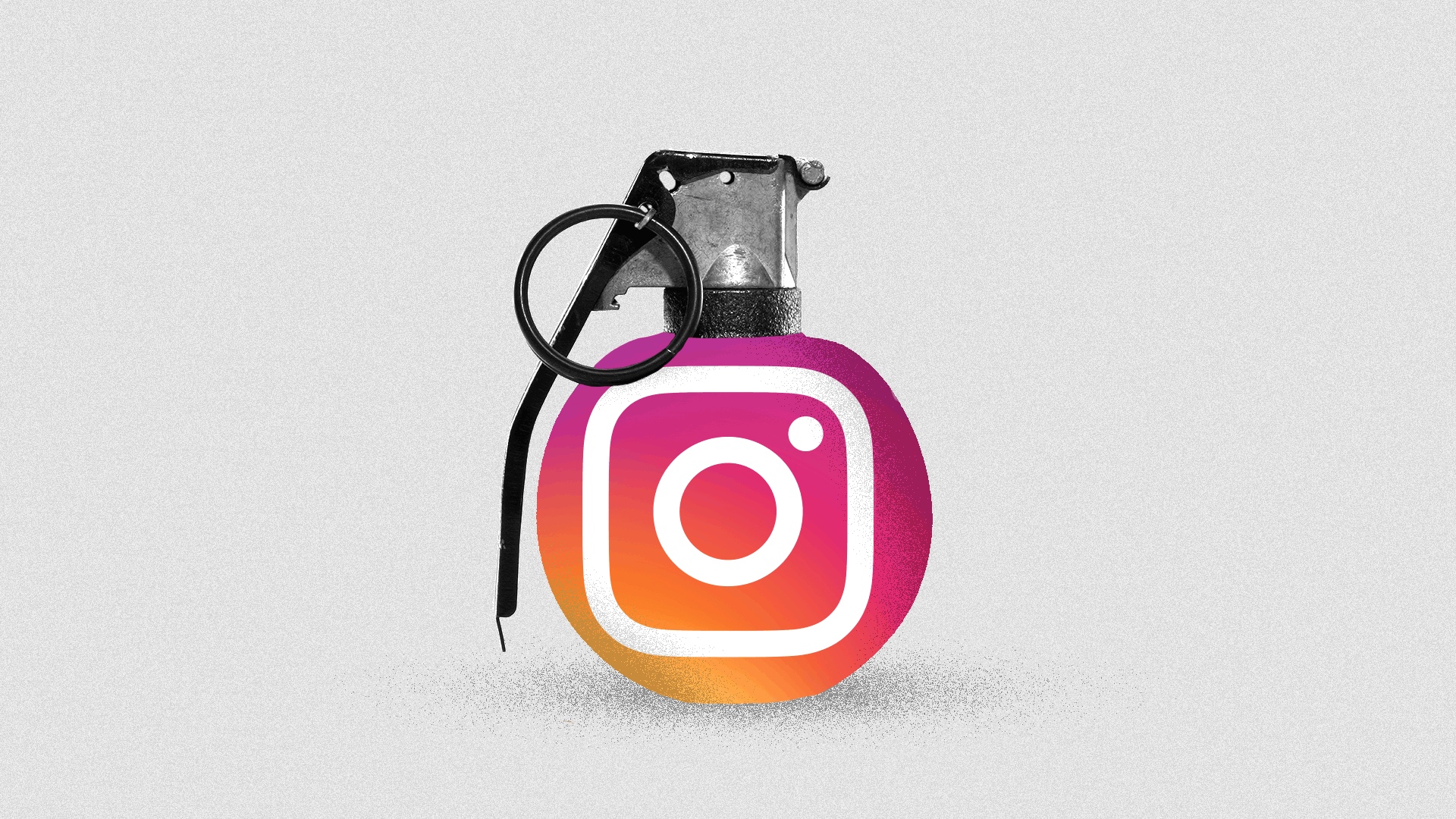

Facebook and Twitter received the lion’s share of attention in connection with Russia’s election interference in 2016. But Instagram, the photo- and video-posting platform, was more important as a vehicle for disinformation than is commonly understood, and it could become a crucial Russian instrument again next year.

Instagram’s image-oriented service makes it an ideal venue for memes, which are photos combined with short, punchy text. Memes, in turn, are an increasingly popular vehicle for phony quotes and other disinformation. Deepfake videos are another potential danger on Instagram. Made with readily available artificial intelligence tools, deepfakes seem real to the naked eye and could be used to present candidates as saying or doing things they have never said or done.

There’s more to worry about than just Instagram. As I explain in a new report published by the New York University Stern Center for Business and Human Rights, the Russians may not be the only foreign operatives targeting the US. Iranians pretending to be Americans have already jumped into the US disinformation fray. And China, which has deployed English-language disinformation against protesters in Hong Kong, could turn to the US next.

In terms of sheer volume, domestically generated disinformation – coming mostly from the US political right, but also from the left – will probably exceed foreign-sourced false content. One of the conspiracy theories likely to gain traction in coming months is that the major social media companies are conspiring with Democrats to defeat Donald Trump’s bid for re-election.

Whoever is spreading disinformation meant to rile up the American electorate, Instagram will almost certainly come into play. Started in 2010, it was acquired by Facebook 18 months later for $1bn. Today, Instagram has about 1 billion users, compared to nearly 2.4 billion for Facebook, 2 billion for YouTube (which is owned by Google), and 330 million for Twitter.

In 2016, the Internet Research Agency (IRA), a notorious Russian trolling operation, enjoyed more US user engagement on Instagram (187m) than it did on any other social media platform (Facebook 77m and Twitter 73m), according to a report commissioned by the Senate intelligence committee and released in December 2018. Other observers have noted that, beyond Russian interference, domestically generated hoaxes and conspiracy theories are thriving on Instagram.

“Instagram is a hotbed for disinformation dissemination,” Otavio Freire, chief technology officer and president of SafeGuard Cyber, a social media security company, told me. The visual nature of content makes it easier to stoke discord by speaking to audiences’ beliefs through memes. This content is easy and inexpensive to produce but more difficult to factcheck than articles from dubious sites.

Facebook is belatedly trying to filter out some of the muck found on Instagram. In the past year, hundreds of Instagram accounts have been removed for displaying what Facebook calls “coordinated inauthentic behavior”.

In August, Facebook announced a test program that uses image-recognition and other tools to find questionable content on Instagram, which is then sent to outside factcheckers that work with Facebook. In addition, Instagram users can now, for the first time, flag dubious content as they encounter it. The platform has also made it easier for users to identify suspicious accounts by disclosing such information as the accounts’ location and the ads they’re running.

But Facebook and Instagram could do more. Content that factcheckers deem to be false is removed from certain Instagram pages but not taken down altogether. In my view, once social media platforms carefully determine that material is provably false, it ought to be eliminated so that it won’t spread further. Platforms should retain a copy of the excised content in a cordoned-off archive available for research purposes to scholars, journalists and others.

Another problem is that Facebook and the other major social media companies have allowed responsibility for content decisions to be dispersed among different teams within each firm. To simplify and consolidate, each company should hire a senior official who reports to the CEO and supervises all efforts to combat disinformation.

Finally, the platforms should cooperate more than they do now to counter disinformation. Purveyors of false content, whether foreign or domestic, tend to operate across multiple platforms. To rid the coming election of as much disinformation as possible, the social media companies ought to emulate collaborative initiatives they have used to stanch the flow of child pornography and terrorist incitement.

Fair elections depend on voters making decisions informed by facts, not lies and distortions. That’s why the social media companies must do as much as possible to protect users of Instagram and the other popular platforms from disinformation.

By Paul M Barrett, deputy director of the New York University Stern Center for Business and Human Rights